If you believe AI proponents like Nvidia head honcho Jensen Huang, data centres will become newfangled “AI factories” that pump out “intelligence” or insights to help people run their everyday lives, say, learn skills faster or run a business more efficiently.

Instead of making shoes, generating electricity or sending Internet data to you, such AI factories will deliver a much-needed “intelligence” that changes everything, from powering a chatbot to a physical robot in future.

And like any factory, these AI factories need a proper bunch of machines to produce this intelligence. This is where Nvidia’s much-sought-after chips dominate the headlines and even engender great-power rivalry today.

At the Computex show this week, we got a close look at some Nvidia-powered AI servers that are doing all the heavy lifting to make AI possible.

These tasks include training large language models (so they become smart), inferencing from the trained knowledge (to sound smart in a response) and developing an app or service (to use AI for real, such as in scientific research).

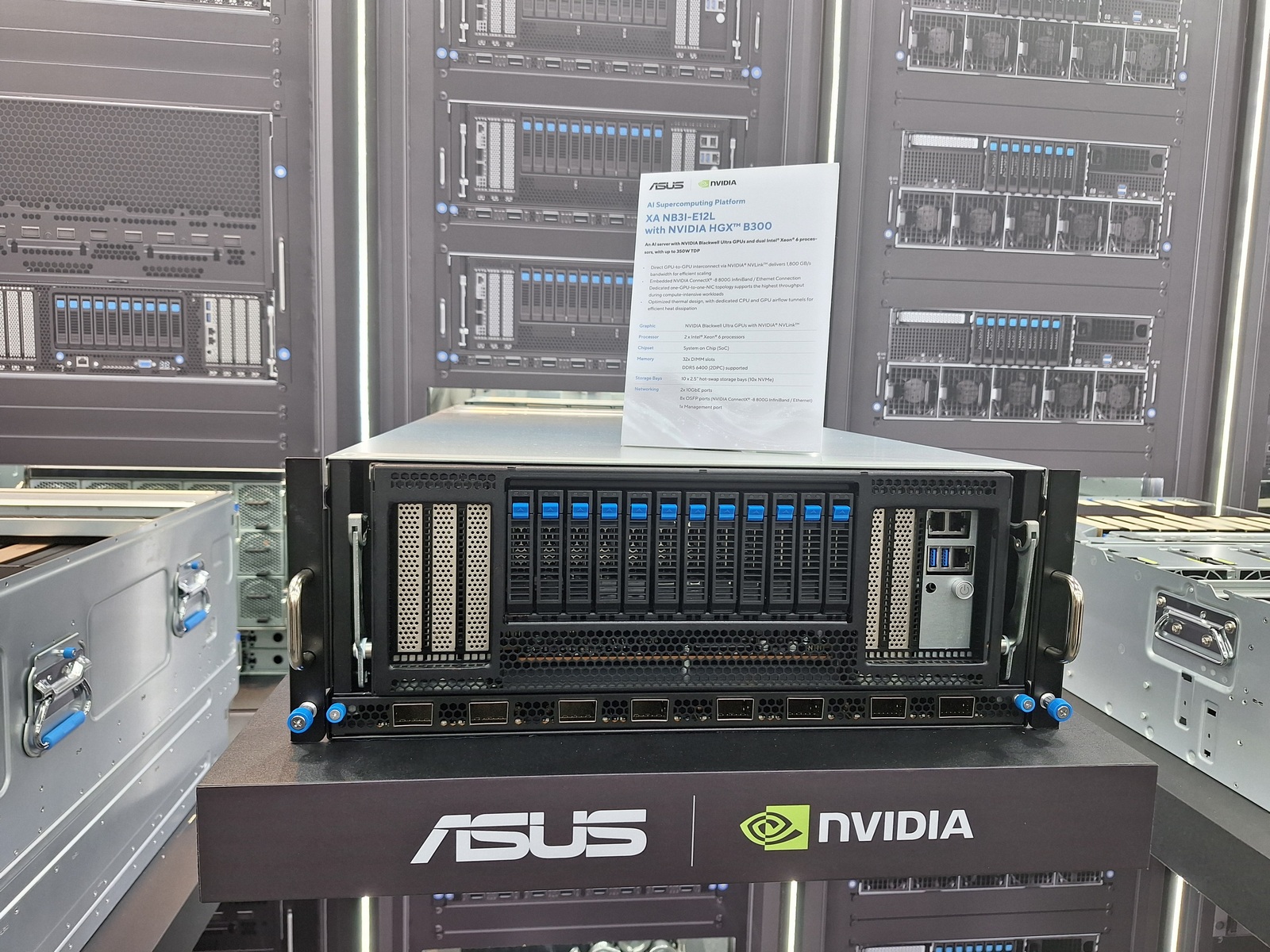

Asus, one of Nvidia’s many partners that make the AI machines using its graphics processing units (GPUs), showed us a few interesting things in Taipei:

1. AI servers are super dense

Just a few years ago, a typical rack of servers in a data centre usually had a capacity of under 10kW. This was because they typically ran “simple” tasks such as websites, office productivity or payroll and accounting. These tasks have been well optimised over time.

Then came AI. Training an AI model takes a tremendous amount of computing power, say, to learn patterns and to recognise and differentiate one thing from another. The size of the data sets involved matters, too. Today, an AI data centre rack can be so densely packed with computing power that its capacity exceeds 100kW.

At the Asus booth at Computex, for example, we saw an Nvidia H200-based setup that sucks up to 74.4kW – that’s from eight nodes each drawing 9.3kW.
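To put that figure in context, here is a quick back-of-the-envelope sketch in Python. It uses only the numbers quoted above, treats "under 10kW" as a flat 10kW ceiling for an older rack, and assumes every node draws its quoted peak at the same time. The variable and function names are made up for illustration.

```python
# Figures quoted in the article; names are illustrative only.
NODES_PER_RACK = 8
KW_PER_NODE = 9.3
LEGACY_RACK_KW = 10.0  # assumption: "under 10kW" treated as a 10kW ceiling


def rack_power_kw(nodes: int, kw_per_node: float) -> float:
    """Total rack draw, assuming every node runs at its quoted peak."""
    return nodes * kw_per_node


h200_rack_kw = rack_power_kw(NODES_PER_RACK, KW_PER_NODE)
ratio = h200_rack_kw / LEGACY_RACK_KW

print(f"H200 rack: {h200_rack_kw:.1f} kW")
print(f"Roughly {ratio:.1f}x the ceiling of an older rack")
```

In other words, this one rack draws more than seven times what a typical rack did a few years ago, and it is still short of the 100kW-plus racks mentioned earlier.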

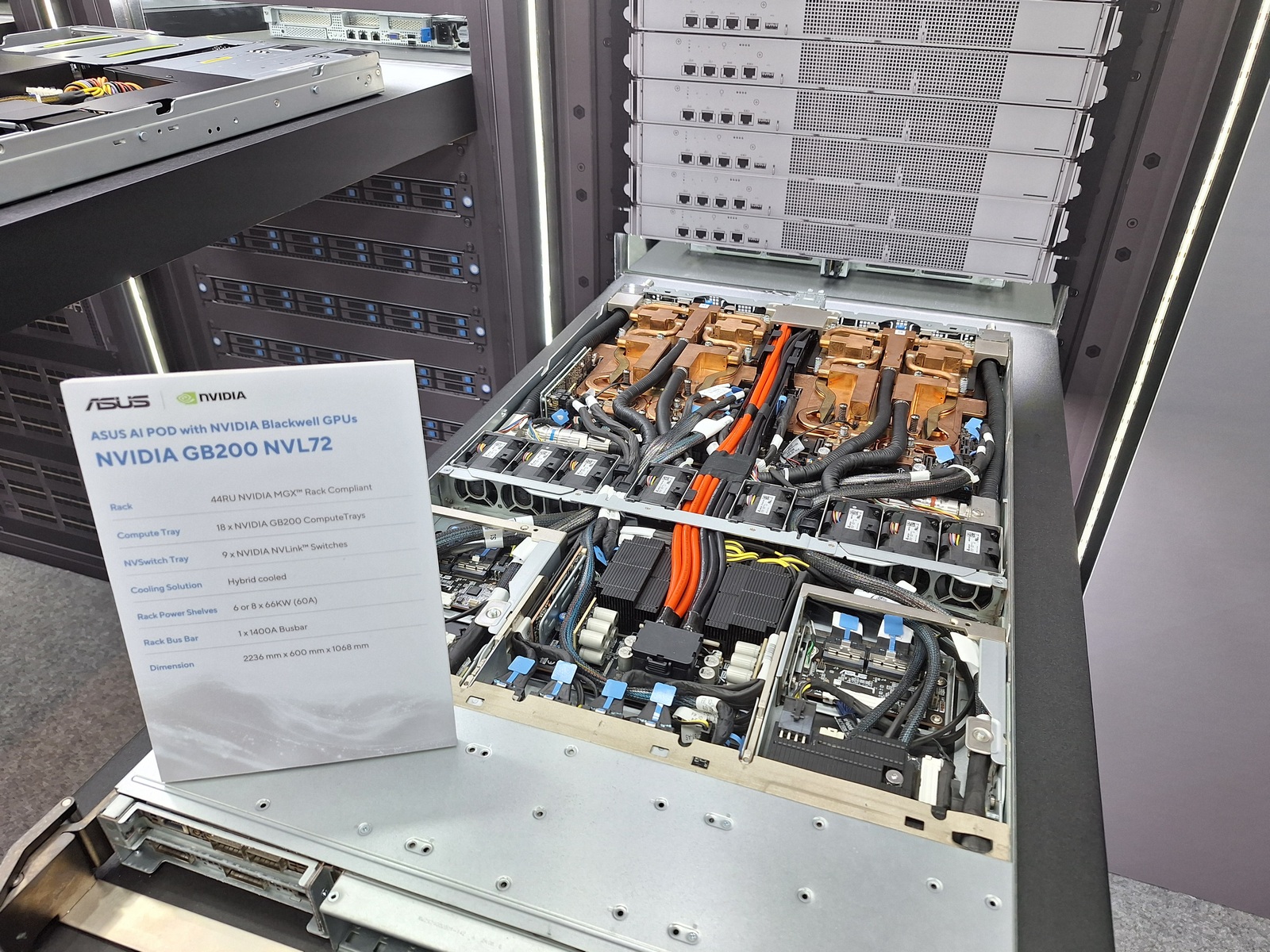

It’s not even Nvidia’s most powerful machine – the latest GB200- and GB300-based servers, which Asus also sells in a rack called AI Pod, are even more advanced. Needless to say, these machines pack even more compute into a small enclosure that slots into standard data centre racks.

2. Water cooling is preferred

The added computing power in a small enclosed space means these machines run very hot. Fans are no longer efficient enough and many data centre operators have turned to liquid cooling. It helps that liquid is a better conductor of heat than air – you spend less energy transferring that heat away.

Asus’ GB200- and GB300-based AI servers, for example, come in both air and liquid cooling options. For liquid cooling, a copper heat pipe takes the heat away from the hot-running chips. It is cooled down further by fans before the coolant is returned to cool down the chip again.

Another, even more exotic form of liquid cooling is immersion cooling. This means sliding entire electronic components – usually a chip and motherboard – into a non-conductive liquid to cool them down.

As you’d imagine, this is an even more efficient cooling method, though it’s also harder to adopt. Asus doesn’t make immersion cooling kits but data centre equipment makers such as Vertiv do.

It costs extra money to set up these large tanks of liquid and you still need to reconfigure a data centre’s server halls – for one, you don’t need so many fans now.

And it’s somewhat ironic that liquid cooling – including immersion cooling – has long been a hobbyist pursuit for PC enthusiasts, who today have their own closed-loop liquid coolers for their CPUs.

Notably, in another hall in Computex this week, an even more exotic method – liquid nitrogen from large tanks – was used to cool down CPUs in a contest to see who could push their PCs to extremes.

3. They scale out and scale up

Okay, this is a little bit of Nvidia marketing-speak but it speaks to the different needs of businesses today. Depending on the AI capabilities they wish to focus on, they can either scale out with more and smaller Nvidia-based machines or scale up with more powerful Nvidia-based machines.

At the extreme end, Asus’ AI Pod comes in an entire rack that can fit 72 Nvidia Blackwell Ultra GPUs and 36 Grace CPUs. You can train complex AI models with 1 trillion parameters, the upper end of such activities today.

To “build up” to these capabilities, you’d need deep pockets, of course. Or buy time or tokens on one of these machines run by a hyperscale cloud provider.

At the slightly lower end, Asus also offers smaller servers that pack in older but still powerful Nvidia H200 GPUs or the new RTX Pro 6000 Blackwell Server Edition chips that promise great AI inference performance for AI agents.

These servers help to “scale out” AI capabilities by bringing them to more parts of the enterprise, enabling employees to tap the computing power in an AI factory to, say, generate images faster or deliver customer answers more coherently.

4. AI can be run on small machines

Don’t think everything AI needs to be run in a data centre. Now, Nvidia and many of its technology partners even have lunchbox-sized PCs that deliver enough horsepower to test-drive, say, your latest research without having to go to the cloud, set things up, test and then scrub the data for security.

The Ascent GX10 from Asus, for example, packs an Nvidia GB10 Grace Blackwell Superchip capable of prototyping, fine-tuning and running inference on AI models with up to 200 billion parameters. And it is not much bigger than a mini PC.
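For a rough sense of what 200 billion parameters means for a machine this small, the Python sketch below estimates the memory needed just to hold a model's weights. The bytes-per-parameter values are common rules of thumb for 16-bit, 8-bit and 4-bit number formats, not figures from Asus or Nvidia, and the estimate ignores everything else a running model needs, such as working memory. All names here are hypothetical.

```python
# Assumed storage cost per parameter for common number formats
# (rule-of-thumb values, not vendor specifications).
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate gigabytes needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


PARAMS = 200e9  # the 200-billion-parameter figure quoted above

for precision in BYTES_PER_PARAM:
    gb = weight_memory_gb(PARAMS, precision)
    print(f"{precision}: about {gb:.0f} GB of weights")
```

Under these assumptions, the same model shrinks from about 400GB of weights at 16-bit to about 100GB at 4-bit, which suggests why lower-precision formats matter so much when the hardware has to fit on a desk.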

A lesson from Sun Microsystems

It’s true Nvidia isn’t the only game in town when it comes to AI – AMD and Intel both offer alternatives, plus there are ways to use AI accelerators instead of full-fledged Nvidia GPUs for some tasks that don’t need the extreme performance.

Where Nvidia has locked up the market is with its popular CUDA software, which gives developers a ready-made set of tools to get up to speed with deep learning and other tasks.

Another factor is Nvidia’s high-speed NVLink connections between the various components in an AI server, which not only speed things up but also help join together multiple GPUs (like in the Asus AI Pod) to deliver a lot more performance.

Yet Nvidia should take a lesson from Sun Microsystems. Who? Yes, the company that once called itself the dot in dotcom in the late 1990s and early 2000s, because it was the only one that could make servers powerful enough to enable large-scale websites for e-commerce.

Unfortunately, people later found a way to link up multiple slower but cheaper Intel servers to deliver good performance that rivalled the specialised and costly Sun machines.

This was the seed needed to grow cloud computing into what it is today. It was built on such “commodity” servers powered by Intel and AMD chips. They replaced the larger, monolithic ones from Sun, which got sold to Oracle in 2010.

Just like Sun, Nvidia is selling the picks and shovels in yet another gold rush about 20 years later. It’s the biggest player in town, as Sun was. Now, the question is whether it can retain its lead by selling a vision of AI factories that depend on its chips to deliver the gold.