Kicking off each year in early January, the CES show in Las Vegas has always been a useful barometer of where technology is headed. This year’s message was unusually clear: AI is the new tech infrastructure, at the forefront of industry and business.

Enterprises urgently need to understand that digitalisation is now about AI transformation. The time for AI experiments and pilots is over.

Across the keynote speeches and exhibits at CES, which ended last week, it was clear that AI is in everything and is now the new compute platform, no longer a technical footnote. It now shapes cost structures, vendor dependence, security posture and even organisational skills.

Driving this trend are semiconductors. The battle for AI is no longer about faster chips. It is about who defines how AI is built, deployed and paid for, reflecting an inflection point for the industry and the business world.

Pointing the way at CES were the world’s two leading semiconductor firms, Nvidia and AMD. On the surface, their new products and solutions suggest that AI compute is fragmenting, not consolidating.

A closer look shows that they are addressing different workloads which demand different architectures. Training giant models, running inference at the edge, powering robots or optimising enterprise workflows each comes with distinct requirements.

Their distinct visions of the AI era will push enterprises to think harder about the trade-offs they need to make. What is clear is that AI strategy and silicon strategy are now inseparable.

AMD: AI everywhere in a heterogeneous environment

AMD chair and chief executive Lisa Su delivered CES’ first official keynote, outlining the company’s vision for an AI-powered future that reaches far beyond data centres and research labs.

Calling AI the most important technology of the last 50 years, she said: “It’s already touching every major industry, whether you’re going to talk about health care or science or manufacturing or commerce.”

“We’re just scratching the surface, AI is going to be everywhere over the next few years,” she added. “And most importantly, AI is for everyone.”

AMD’s biggest pitch is integration, that is, tightly integrating CPUs, GPUs, networking and software together to scale AI infrastructure efficiently, she added.

AMD’s latest Helios rack platform is designed to bring together high-performance computing, advanced accelerators and networking in a single, scalable platform capable of supporting the next generation of AI models.

It is an open, modular, double-wide rack that weighs nearly 3,120kg, more than the weight of two cars.

Su also unveiled the Ryzen AI Embedded chips, a new platform to power AI-driven applications at the edge, for use in automotive digital cockpits, smart healthcare and humanoid robotics.

She was joined on stage by Gene.01, a sleek-looking humanoid robot by Italian firm Generative Bionics. The robot is equipped with touch sensors, including sensor-embedded shoes that provide tactile feedback.

Su called it “super cool”, highlighting how the same sensor technology can also be adapted for human patients in medical and rehabilitation settings.

The AMD CEO also unveiled a series of chips for the PC, desktop and gaming sectors. The new Ryzen AI 400 Series is aimed at premium ultra-thin and light notebooks and small form-factor desktops. The Pro 400 Series is aimed at AI acceleration and modern security.

They broaden the client computing portfolio, bringing expanded AI capabilities, premium gaming performance and commercial-ready features to more systems.

Raising the bar for gaming is the new Ryzen 7 9850X3D, AMD’s newest and fastest gaming processor. It delivers up to 27 per cent better gaming performance than the Intel Core Ultra 9 285K, according to AMD. The new chip has eight high-performance cores and 16 threads, delivering ultra-low latency.

These new products highlight an important message: AMD is betting that many organisations will not want to put all their AI eggs in one basket. As AI costs rise and workloads diversify, enterprises want room to optimise, by workload, by budget and by use case.

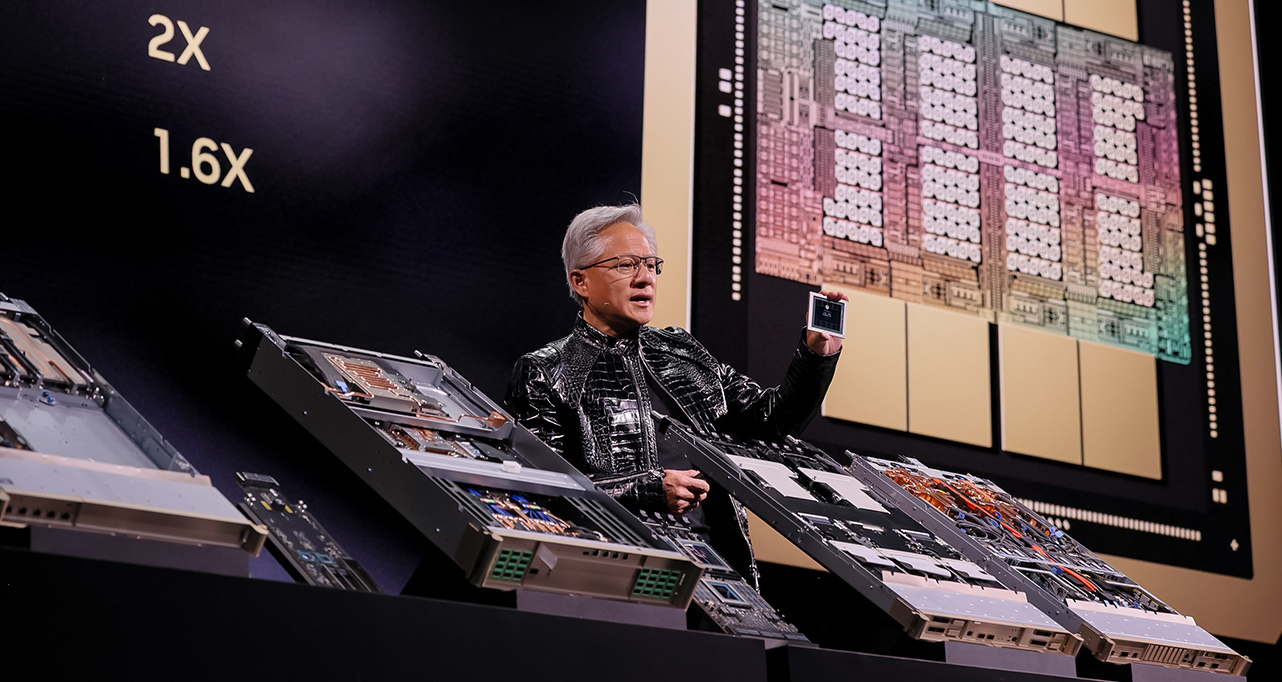

Nvidia’s new Rubin platform

Jensen Huang, chief executive of rival Nvidia, declared at CES 2026 that AI is scaling into every domain and every device.

“Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence,” he said. “What that means is some US$10 trillion or so of the last decade of computing is now being modernised to this new way of doing computing.”

Two significant products he unveiled were the Rubin architecture, which will replace the Blackwell architecture later this year, and Alpamayo, an open reasoning model family for autonomous vehicle development.

The six-chip Rubin platform addresses growing bottlenecks in model training, storage and interconnection. It also has a new Vera CPU designed for agentic reasoning.

Currently in production, the Rubin architecture is a significant advance in speed and power efficiency. According to Nvidia, it will operate three and a half times faster than the previous Blackwell architecture on model-training tasks and five times faster on inference tasks. The new platform will also support eight times more inference compute per watt.

Rubin’s promise is fewer integration headaches, faster deployment and predictable performance at scale. For companies racing to train large models or run AI-heavy workloads, that is a compelling proposition.

The new products will enable these companies to train trillion-parameter models efficiently at a time when compute power is in high demand. They also further enhance the tech stack Nvidia has been building.

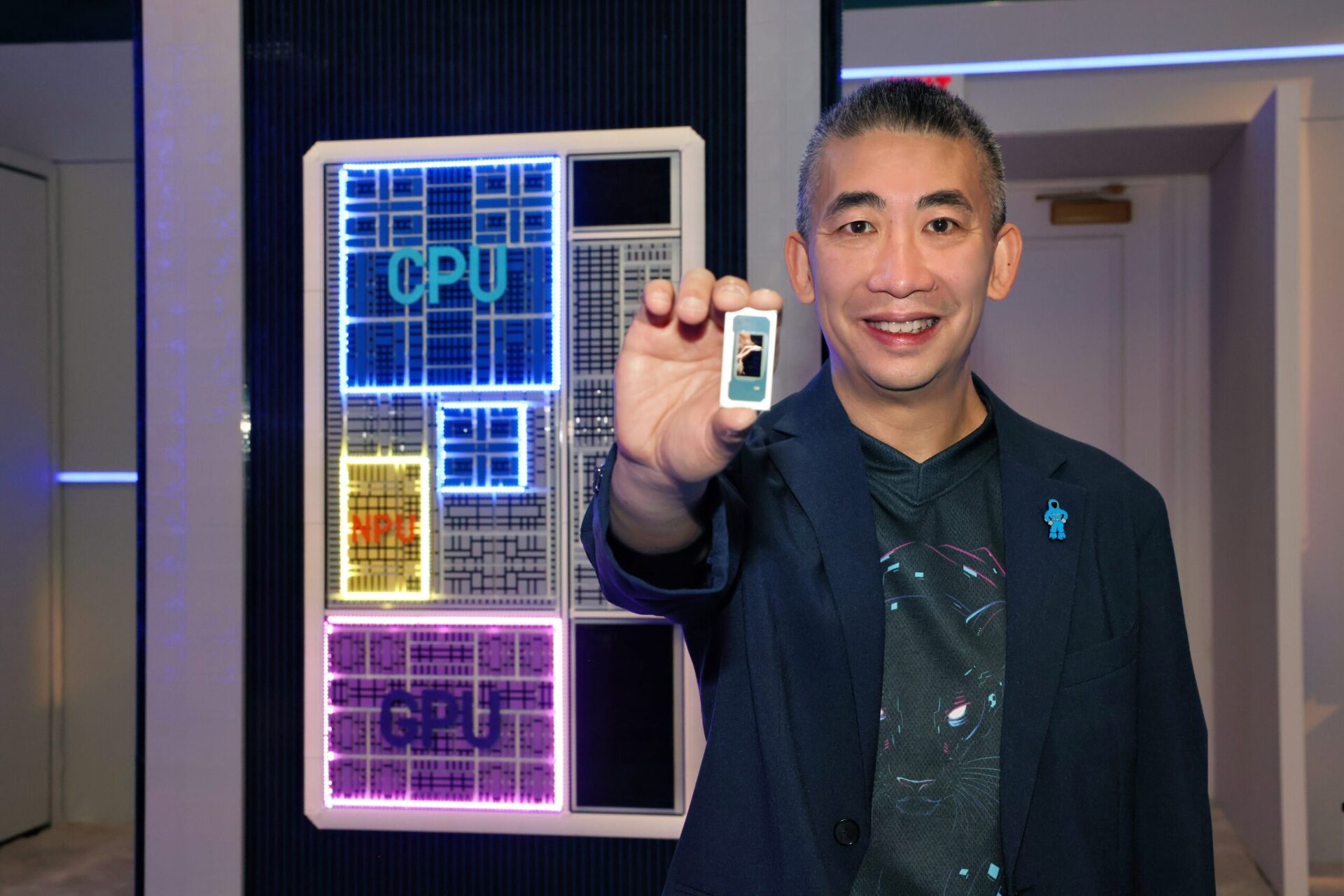

Intel: AI at the edge

Last but not least, Intel, the storied semiconductor company, unveiled the Core Ultra Series 3 silicon, its new AI chip for laptops. Codenamed Panther Lake, it will use Intel’s latest cutting-edge 18A manufacturing process for enhanced performance, density and power delivery. Intel is ramping up production and orders are open.

With this new chip, Intel aims to bake AI acceleration into everyday computing. The new chips will power everything from enterprise PCs and edge servers to industrial systems and telecom infrastructure.

Intel said the chip would deliver 60 per cent better performance than the prior generation of chips. Analysts said Intel wants the new chip to bolster its core PC business by improving the non-AI qualities buyers look for, such as battery life, and to boost performance for AI tasks such as coding.