AMD has just unveiled its 4th-gen EPYC processors, which promise higher performance for servers delivering today’s digital services while keeping ownership costs down and cutting the power bill.

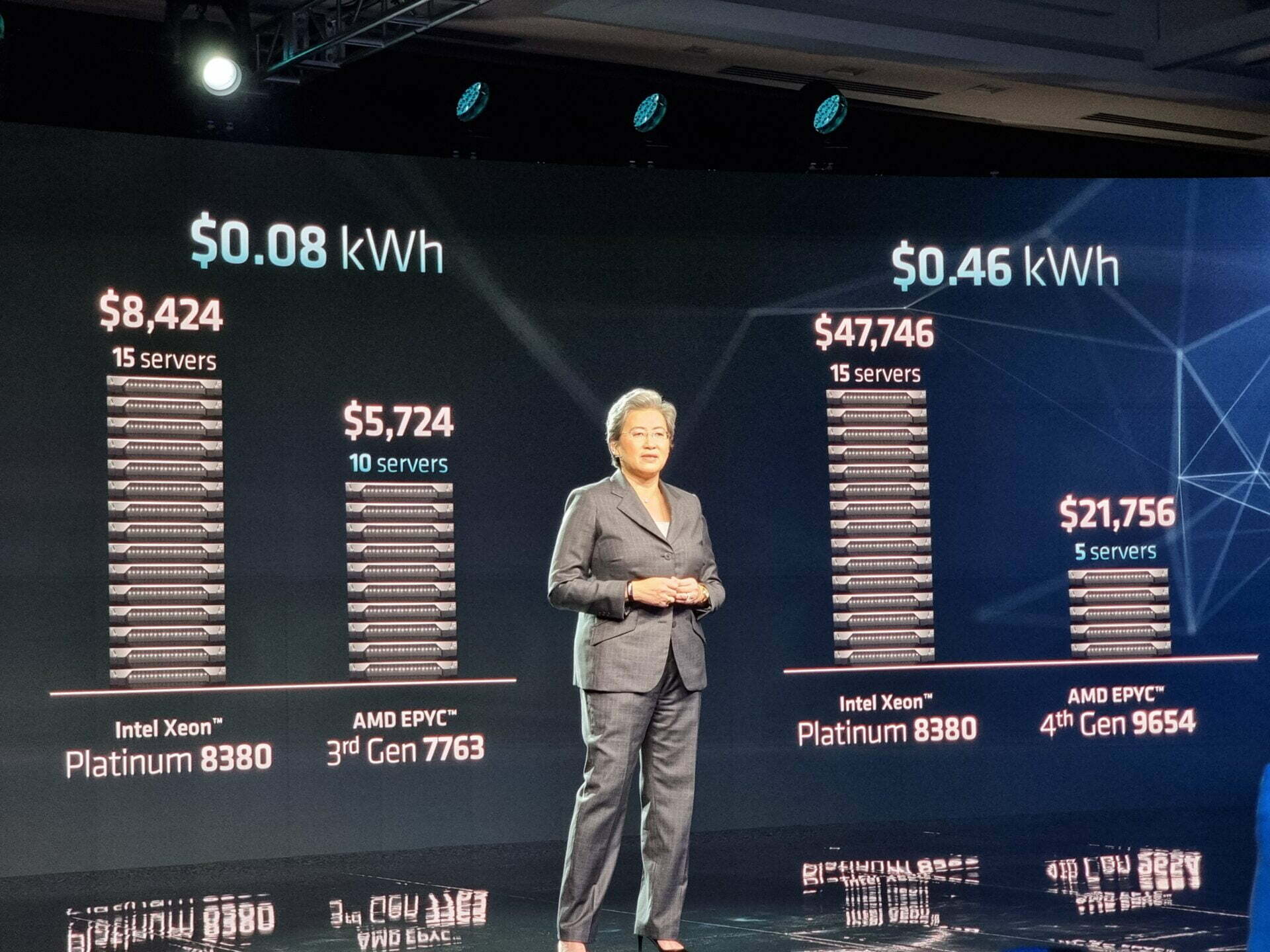

Five servers running AMD’s top-of-the-line 4th-gen EPYC 9654 processors can match 15 servers packing Intel’s current 3rd-gen flagship server chip, the Xeon Platinum 8380, in workload tests, the chipmaker said at an event in San Francisco on November 10.

The bold claims reflect AMD’s confidence this year, after it overtook long-time rival chipmaker Intel in market value.

It helps that AMD is launching the new 4th-gen AMD EPYC processors, codenamed Genoa and built on the Zen 4 microarchitecture, ahead of the competition.

Intel has delayed its 4th-gen Xeon Scalable refresh, codenamed Sapphire Rapids, to January 2023.

As a result, AMD partners including HPE, Dell, Oracle Cloud Infrastructure and others already have a range of 4th-gen EPYC offerings ready.

Pulling further ahead

The 4th-gen EPYC processors run on the AMD EPYC 9004 system-on-chip (SoC) platform. Each processor can support up to 12 Zen 4 “Core Complex Dies” (CCDs) of eight cores each, for a maximum of 96 cores and, with simultaneous multithreading, 192 threads per processor.
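The core and thread ceiling follows directly from the die count. A minimal sketch of that arithmetic, assuming every CCD is fully populated and two-way simultaneous multithreading (SMT) is enabled; the function names are hypothetical:

```python
# Back-of-envelope check of the Genoa core and thread counts quoted above.
CCDS_PER_SOCKET = 12   # maximum Zen 4 CCDs per EPYC 9004 processor
CORES_PER_CCD = 8      # cores on each CCD
THREADS_PER_CORE = 2   # two-way SMT, assumed enabled

def max_cores(ccds: int = CCDS_PER_SOCKET, cores_per_ccd: int = CORES_PER_CCD) -> int:
    """Cores when every CCD is present with all of its cores enabled."""
    return ccds * cores_per_ccd

def max_threads(cores: int, threads_per_core: int = THREADS_PER_CORE) -> int:
    """Hardware threads exposed to the operating system with SMT on."""
    return cores * threads_per_core

cores = max_cores()
threads = max_threads(cores)
print(f"{cores} cores, {threads} threads")  # 96 cores, 192 threads
```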

The processor is fabricated on a “hybrid 5 + 6nm” platform: the CCDs are built on Taiwanese semiconductor manufacturer TSMC’s 5nm process node, while the I/O die is made on a 6nm process.

The 4th-gen EPYC supports 12-channel DDR5-4800 memory with ECC and offers up to 128 PCIe 5.0 lanes per socket (160 in a two-socket server). Intel will only bring DDR5 and PCIe 5.0 to its server chips with Sapphire Rapids, and then with eight memory channels and 80 PCIe lanes per socket.
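The extra memory channels translate directly into theoretical peak bandwidth. A rough sketch, assuming 8 data bytes per transfer per channel (ECC bits excluded) and DDR5-4800 on both platforms; the Sapphire Rapids memory speed is an assumption not stated above, and real-world bandwidth will be lower than these peaks:

```python
BYTES_PER_TRANSFER = 8  # 64 data bits per DDR5 channel; ECC bits are not counted

def peak_bandwidth_gbs(channels: int, mega_transfers: int) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s, ignoring efficiency losses."""
    return channels * mega_transfers * BYTES_PER_TRANSFER / 1000

genoa = peak_bandwidth_gbs(channels=12, mega_transfers=4800)
# Assumption: an eight-channel platform also running DDR5-4800.
eight_channel = peak_bandwidth_gbs(channels=8, mega_transfers=4800)
print(f"12-channel: {genoa:.1f} GB/s, 8-channel: {eight_channel:.1f} GB/s")
# 12-channel: 460.8 GB/s, 8-channel: 307.2 GB/s
```

At equal memory speed, the ratio is simply 12/8, or 1.5 times the peak bandwidth per socket.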

The processors support Compute Express Link (CXL) 1.1+, with a specific focus on memory expansion. CXL is an emerging interconnect standard that runs over the PCI Express physical layer, letting servers expand and pool memory through attached devices rather than only through the usual dual inline memory module (DIMM) slots.

Architecture-wise, Zen 4 doubles the L2 cache to 1MB per core (L3 cache remains at 32MB per CCD, shared by its eight cores) and enlarges the micro-op cache, which holds already-decoded instructions so frequently used ones can bypass the decoders, from 4,000 to 6,750 operations.
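Per-core and per-die figures add up to the socket totals. A minimal sketch, assuming a fully populated 12-CCD part such as the 96-core EPYC 9654; the helper name is hypothetical:

```python
L2_MB_PER_CORE = 1   # private L2 per Zen 4 core
L3_MB_PER_CCD = 32   # L3 shared by the cores on one CCD
CORES_PER_CCD = 8

def cache_totals(ccds: int, cores_per_ccd: int = CORES_PER_CCD) -> tuple[int, int]:
    """Return (total L2 MB, total L3 MB) for a socket with the given CCD count."""
    cores = ccds * cores_per_ccd
    return cores * L2_MB_PER_CORE, ccds * L3_MB_PER_CCD

l2, l3 = cache_totals(ccds=12)
print(f"L2: {l2} MB, L3: {l3} MB")  # L2: 96 MB, L3: 384 MB
```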

The 4th-gen EPYC also adds support for Advanced Vector Extensions 512 (AVX-512), a set of 512-bit vector instructions that accelerate data-parallel workloads, including extensions aimed at artificial intelligence such as BFloat16 and Vector Neural Network Instructions (VNNI).
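On Linux, whether a host exposes these instructions can be checked from the CPU flags the kernel reports. A small sketch, assuming a Linux system where `/proc/cpuinfo` lists `avx512f`, `avx512_bf16` and `avx512_vnni` in its `flags` line; the helper names are hypothetical:

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for the AVX-512 features mentioned above.
WANTED = ("avx512f", "avx512_bf16", "avx512_vnni")

def parse_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line of /proc/cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()

def avx512_support(flags: set[str]) -> dict[str, bool]:
    """Map each wanted feature to whether the CPU reports it."""
    return {name: name in flags for name in WANTED}

if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        print(avx512_support(parse_flags(path.read_text())))
    else:
        print("/proc/cpuinfo not found (not a Linux host)")
```

Parsing is kept separate from file access so the logic can be checked against sample text on any machine.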

The processor should also better serve confidential computing workloads with an enhanced “Infinity Guard” feature set that supports 256-bit AES-XTS encryption and SEV-SNP key extensions, and roughly doubles the number of unique encryption keys over the 3rd-gen EPYC to 1,006, with each key able to secure a separate virtual machine.

Critically, the platform refresh requires a socket change: the 4th-gen EPYC moves to Socket SP5 from SP3, which has been in use since 2017 for AMD’s Zen, Zen 2 and Zen 3-based EPYC server processors.

Leadership across multiple uses

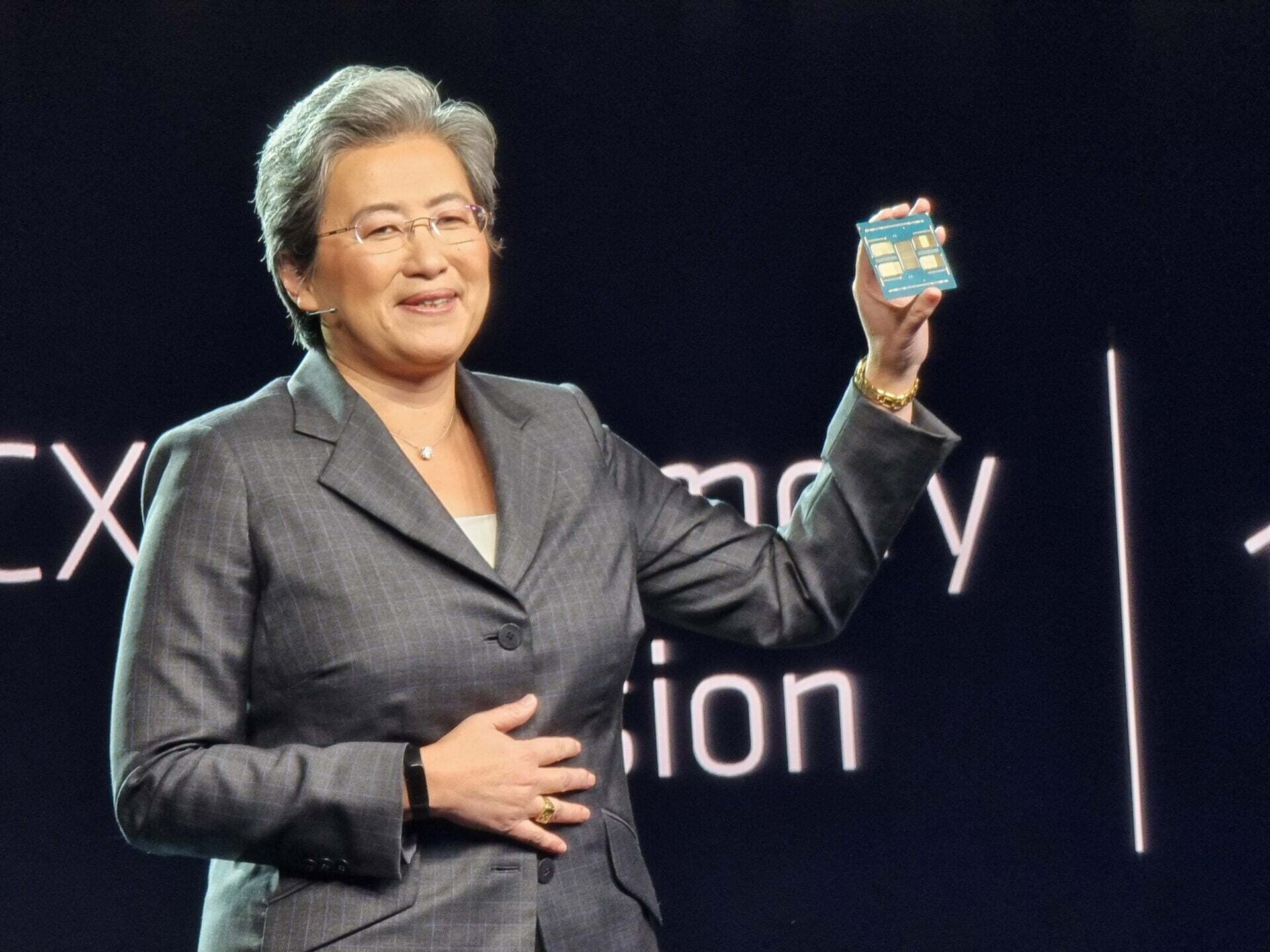

At the event, AMD executives, including chair and CEO Dr Lisa Su, took the audience through benchmark tests across floating point, integer, virtualisation and database query workloads.

The tests were meant to show that the 4th-gen EPYC 9654 not only extends the lead AMD has held over Intel since the 2nd-gen EPYC, but consistently outperforms the Xeon Platinum 8380 by a factor of two to three.

Integer performance matters for cloud computing applications such as search and as-a-service offerings (for instance, those hosted on Microsoft Azure and Amazon Web Services), while floating point performance matters most in high performance computing use cases such as machine learning and simulation.

At an architecture pre-brief held a day before the official launch, Ram Peddibhotla, AMD corporate vice-president for EPYC product management, explained that because most businesses design their server setups around planned workloads, fewer servers immediately translate into lower capital expenditure on accompanying hardware such as memory, network and storage modules.

A more compact setup also means lower energy and cooling requirements, and a denser outfit that can do more with less, he added.

In the earlier example, a user opting for EPYC 9654 servers could potentially match the performance of a Xeon Platinum 8380 setup with up to 67 per cent fewer servers and 50 per cent lower power draw, cutting carbon output by up to 25 metric tonnes.
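The 67 per cent figure is simply the five-for-15 consolidation expressed as a reduction. A minimal sketch of that sizing arithmetic, using a hypothetical normalisation in which one Xeon Platinum 8380 server delivers 1.0 unit of work, so AMD’s claim implies 3.0 units per EPYC 9654 server; the power and carbon figures depend on per-server draw, which is not given here, so they are not modelled:

```python
import math

def servers_needed(target_perf: float, perf_per_server: float) -> int:
    """Whole servers required to reach a target aggregate performance."""
    return math.ceil(target_perf / perf_per_server)

def percent_fewer(old: int, new: int) -> float:
    """Reduction in server count, as a percentage of the original fleet."""
    return 100 * (old - new) / old

# Hypothetical normalisation: 15 Xeon servers at 1.0 unit each set the target.
target = 15 * 1.0
epyc = servers_needed(target, perf_per_server=3.0)
print(epyc, f"{percent_fewer(15, epyc):.0f}% fewer servers")  # 5 67% fewer servers
```

Rounding up with `math.ceil` reflects that a fleet can only contain whole servers.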

Software cost, network and storage power external to the server are not included in the analysis.

When quizzed on the competitive advantage of the 4th-gen EPYC against Intel’s 3rd-gen Xeon Scalable, Peddibhotla said the Genoa series is optimised for general-purpose server computing, rather than specialised, memory-bandwidth-sensitive applications that benefit from High Bandwidth Memory 2e (HBM2e).

He also would not be drawn into comparisons with Sapphire Rapids, preferring to limit direct comparisons to products already on the market.

Available today

For all the performance they promise, the new AMD processors are only as strong as the products that put them through their paces to serve users.

A slew of partners including Microsoft Azure, Oracle Cloud Infrastructure, HPE, Dell, Google Cloud and Lenovo took the stage to unveil products ready to launch immediately, no doubt a jibe at a competitor that has yet to reach the starting line. They also rattled off some of the more than 300 world performance records set by the Genoa chips, in workloads as granular as SAP Sales and Distribution modules.

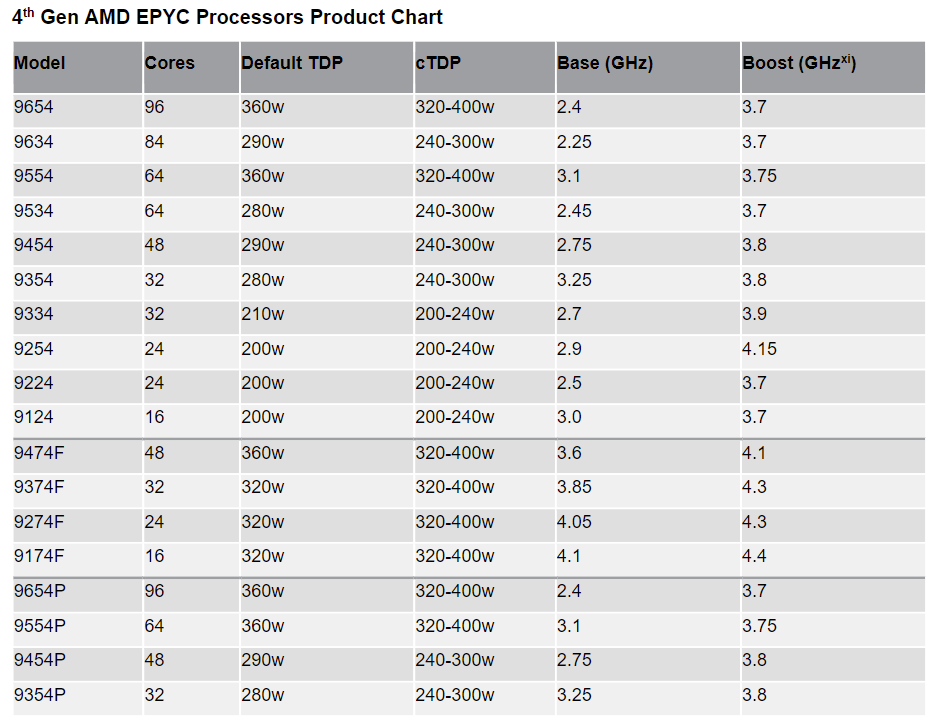

The 4th-gen EPYC processors are available in various stock-keeping units (SKUs) with 16 to 96 cores, in three categories: core performance parts (fewer, faster cores sharing a fixed amount of cache), core density parts (more cores sharing a fixed amount of cache) and balanced parts.
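The trade-off between the performance and density categories comes down to how many active cores share each CCD’s L3. An illustrative sketch, assuming 32MB of L3 per CCD and using hypothetical configurations rather than specific SKUs:

```python
L3_MB_PER_CCD = 32  # L3 per Zen 4 CCD, shared by that die's active cores

def l3_per_core(active_cores_per_ccd: int) -> float:
    """L3 available per core when a CCD runs the given number of active cores."""
    if not 1 <= active_cores_per_ccd <= 8:
        raise ValueError("a Zen 4 CCD has between 1 and 8 active cores")
    return L3_MB_PER_CCD / active_cores_per_ccd

# Hypothetical configurations for illustration, not actual product SKUs.
for label, active in (("density-style", 8), ("balanced-style", 4), ("performance-style", 2)):
    print(f"{label}: {l3_per_core(active):.0f} MB L3 per core")
```

Fewer active cores per die leave more cache, and typically more power headroom, for each remaining core.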

Beyond Genoa, AMD also revealed plans for other 4th-gen EPYC products optimised for different workloads.

They include Bergamo for cloud-native applications and Genoa-X for technical computing, both due in the first half of 2023, and Siena for telco and edge use cases in the second half of 2023.